Discovering a Good Site Name with Mechanical Turk and AYTM.com

Inspired by a post from Software by Rob [1], which was in turn inspired by this Pyxlin [2] post from 2007, I decided to give Mechanical Turk a whirl to help me come up with a product name. I'm going to talk about my experience and how I actually went about doing this.

So the general idea is this: to come up with a memorable name for a site, I have two options:

- Get out a notepad, try to come up with 3-4 names on the spot, and keep the notepad near me for a couple of weeks while I come up with more names, or

- Get a large number of people to each come up with a handful of names on the spot.

Given that I don't bill myself as a good name-come-up-with-er, the second option seemed like the clear choice. I went about it in two phases.

Phase 1: Fan Out

To improve my odds of chancing across a good name, I'd have to collect a lot of potential names. Mechanical Turk lets you farm out work to people easily and cheaply, so I thought I'd go with them.

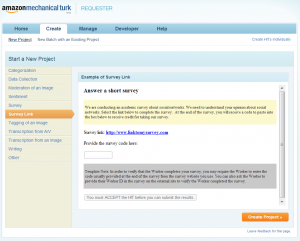

Create a "Survey Link"-type Project

When you create a Turk project as a requester, you're presented with a number of templates. The Survey Link page is a good starting point.

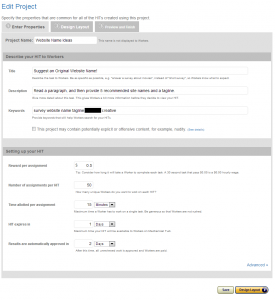

The next screen you'll see is the Edit Project - Enter Properties page.

The first thing you might notice is that the rate seems a bit high. However, this is a creative question, not one of a big batch (e.g. thousands of HITs with the same format) that people can just plow through; it actually requires carefully reading the directions and prompts. Additionally, I'll be restricting my workers to people in the United States, since only they have the context to actually answer the question I'll be asking. So given that I'm guessing the survey will take 5 minutes, and that a fair rate for this is ~$7-8/hr (since we're being cheap bastards here), $0.50 seems like a good starting point.
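To make the arithmetic explicit: at 5 minutes per assignment, $7-8/hr works out to roughly $0.58-0.67, so $0.50 is a starting point a little below that range (about $6/hr). Here's a tiny sketch for converting between the two; the helper names are my own and nothing here talks to Mechanical Turk.

```python
# Back-of-the-envelope HIT pricing: convert between a target hourly wage
# and a per-assignment reward. Pure arithmetic; no Turk API involved.

def reward_for(hourly_rate: float, minutes: float) -> float:
    """Per-assignment reward that pays `hourly_rate` for `minutes` of work."""
    return round(hourly_rate * minutes / 60, 2)


def effective_hourly_rate(reward: float, minutes: float) -> float:
    """Hourly wage implied by paying `reward` for `minutes` of work."""
    return round(reward * 60 / minutes, 2)


if __name__ == "__main__":
    # The "fair" $7-8/hr range for a 5-minute survey
    print(reward_for(7, 5), reward_for(8, 5))  # 0.58 0.67
    # What $0.50 for an estimated 5 minutes actually pays
    print(effective_hourly_rate(0.50, 5))      # 6.0
```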

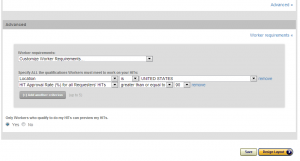

At the bottom of the page you'll see the Advanced link. This lets you pick exactly who can perform your HIT:

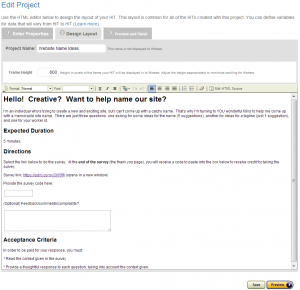

On the next page, you get to actually decide what to show users. This is basically just a blob of HTML. The template gets you about 90% of the way there; here's how I changed it.

Note that I:

- used headers to break up the content,

- explained what was required from each Turk in the very first paragraph after a little hook,

- prominently displayed an expected duration, so Turks could quickly ascertain the value,

- provided concise directions,

- made the survey link pop open in a new window,

- provided clear Acceptance Criteria to reassure Turks they'd get paid for their work, despite the fact that I'm a new requester.

In retrospect, I don't think the "survey code" was necessary. Every single response I got filled it in correctly, which makes me think a non-public survey was obscure enough to keep random people from stumbling onto it.

Any form elements you put directly in this HTML will show up later in your HIT report as a pretty-printed column. If you have an input named `survey_code`, you'll end up with a "Survey Code" column in your data. You can do this for arbitrary form elements. Quite nice!

Now all we need is the actual survey! Off to aytm.com we go! (This works with any survey site, of course, but I like aytm.com.)

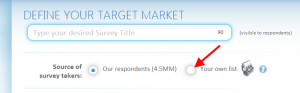

Create your survey

aytm.com ("ask your target market") is primarily for zeroing in on a potentially small niche market and putting questions to it. Only want to survey people 45 and older? Or people with a 4-year degree? Or people in a particular state or county? You can fire off a survey to just that demographic, but it'll cost you to tap into aytm.com's userbase. On the other hand, if you bring your own users, all of their features are totally free.

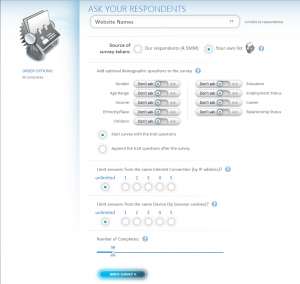

When you click the "Your own list" option, you get a different view. You can ask for demographic information if you really want to (warn people in the Turk project description if you do!), but I ultimately chose not to. The most interesting bit is that you can change the number of Completes; this should match whatever number you set in the Mechanical Turk project goal.

We're now ready to actually create the questions. Here's what I did:

You'll definitely want to put the main context of your question into the sidebar, so it stays visible to remind users for the duration of the survey. You can then make the first question "Instructional Text" to point it out to Turkers and make sure they see it. The next one or two should be the actual questions (e.g. "Provide some sample names"). My only regret here is that I didn't specify a format: some responses were comma-delimited, some were newline-delimited, and some had actual numbering in front of each name. The newline-delimited responses were the easiest to process, so I'd recommend asking for that format.
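If you end up with mixed formats anyway, a small normalizer helps before pasting everything into a spreadsheet. This is a minimal sketch, assuming each response is one free-text string; `split_suggestions` is a hypothetical helper, and it will wrongly split any name that itself contains a comma.

```python
import re

# Leading list markers such as "1.", "2)", "3 -", "-" or "*".
# Digits must be followed by punctuation, so "37signals" is left alone.
_MARKER = re.compile(r"^\s*(?:\d+\s*[.):-]|[-*])\s*")


def split_suggestions(response: str) -> list[str]:
    """Split one free-text answer into individual name suggestions.

    Handles comma-delimited, newline-delimited and numbered answers,
    e.g. "Foo, Bar" or "1. Foo\n2. Bar".
    """
    names = []
    for line in response.splitlines():
        for part in line.split(","):
            name = _MARKER.sub("", part).strip()
            if name:  # skip blank lines and trailing commas
                names.append(name)
    return names
```

For example, `split_suggestions("1. Foo\n2) Bar\n\n- Baz")` returns `["Foo", "Bar", "Baz"]`.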

Also, make sure you ask for Turkers' Worker IDs: this lets you tie any bogus response back to a specific Turker and reject that individual's submission, or reward Turkers for insightful suggestions.
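Matching the two sides up by hand is what makes the later analysis tedious. Here's a minimal sketch of automating it, assuming both sides are exported as CSV. The Turk batch results column is assumed to be `WorkerId` (check your file), and the survey column holding the typed-in ID is called `Worker ID` here purely as a placeholder for whatever you titled that question. `load_rows` and `join_by_worker` are hypothetical helpers.

```python
import csv


def load_rows(path: str) -> list[dict[str, str]]:
    """Read a CSV export into a list of dicts keyed by column header."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def join_by_worker(turk_rows, survey_rows,
                   turk_key="WorkerId", survey_key="Worker ID"):
    """Pair each Turk assignment with the survey answer carrying the same Worker ID.

    Returns (matched, unmatched): matched is a list of (turk_row, survey_row)
    pairs; unmatched lists Turk rows with no survey response, which deserve
    a closer look before you approve or reject them.
    """
    # Worker IDs are typed by hand in the survey, so normalise case/whitespace.
    # If a worker answered twice, the last survey row wins.
    by_worker = {row[survey_key].strip().upper(): row for row in survey_rows}
    matched, unmatched = [], []
    for row in turk_rows:
        survey = by_worker.get(row[turk_key].strip().upper())
        if survey is None:
            unmatched.append(row)
        else:
            matched.append((row, survey))
    return matched, unmatched
```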

If you do ask Turkers to provide a survey code in the HIT form, you can give it to them using the "Add custom message" functionality at the bottom of the page.

There's an "Edit Appearance" link in the sidebar that lets you style your survey -- this is straightforward and I don't really have any comment here, except to point out this is the only way to preview your survey in a way that actually renders the text in the "Enable optional custom message to be presented along the side of the survey" field.

Finally, on the last page you'll be given the chance to launch your survey, but first copy the URL shown and update your Turk HIT page with it. Don't launch yet, though; wait until the next section!

Launch Plan

Before launching "for real", I did a trial run with only 5 or so people. This lets you check that Turks can complete the survey okay and see how long it actually takes them.

So for me, it was taking people a little under 4 minutes to finish. Great, so I'll round up to 5 minutes to let people know what they're getting into.

I made a couple of small tweaks and then relaunched the survey to 50 people:

Okay, so $6.22/hr is a little stingy, but the average time was still under 5 minutes per assignment, so at least people knew what they were signing up for.

Analyzing the Data

This part is a bit tedious, so maybe you can find a better way, but here's what I did. For each entry on the Turk results page, I looked up the Worker ID on the AYTM results page, which looked like this:

For each submission, I copied the name suggestions into the first column of an Excel doc and the Worker ID into the second column, 50 times (woot). As you go, you can Approve and Reject Turkers' submissions, but be careful about Rejecting people: if they feel they've been wrongfully Rejected, they'll let you know, and reversing a rejection is really hard because it can only be done through the API. I spent an hour researching how best to do this and ended up writing a trivial Ruby script. Hurray! Point being, think twice before Rejecting a Turk.
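The Ruby script isn't included here. As a rough present-day equivalent (my substitution, not the script I wrote), boto3's MTurk client has an `approve_assignment` call whose `OverrideRejection=True` parameter approves an assignment that was previously rejected. This sketch assumes you already have the assignment IDs (e.g. from the results page or CSV) and a configured client; `reverse_rejections` is a hypothetical helper.

```python
def reverse_rejections(client, assignment_ids,
                       feedback="Sorry, this was rejected in error."):
    """Approve previously rejected assignments via the MTurk API.

    `client` is assumed to be a boto3 MTurk client, e.g.
    boto3.client("mturk", region_name="us-east-1").
    OverrideRejection=True is what allows approving an assignment
    that was already rejected. Returns the IDs that were approved.
    """
    approved = []
    for assignment_id in assignment_ids:
        client.approve_assignment(
            AssignmentId=assignment_id,
            RequesterFeedback=feedback,
            OverrideRejection=True,
        )
        approved.append(assignment_id)
    return approved
```

To rehearse against the requester sandbox first, pass `endpoint_url="https://mturk-requester-sandbox.us-east-1.amazonaws.com"` when creating the client.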

After cleaning up the data (more pain), I ended up with around 220 candidate names. I was pretty trivially able to whittle this down to 50 (half the submissions weren't exactly what I was looking for), which I then passed around to friends and family to help me narrow it down to 10. This whole process runs mostly on gut feeling; you might not be able to pick the best name out of the crowd, but with a little help from people you know, you can probably pick out the best 10. Once you have 10 names you'd be okay with naming your site after, it's time for the next step.
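Part of that cleanup can be scripted. Here's a minimal sketch, assuming you already have a flat list with one string per suggested name; it merges duplicates that differ only in case or spacing and counts how often each name came up. The count is just one possible signal for the whittling, which I did by hand; `tally_names` is a hypothetical helper.

```python
from collections import Counter


def tally_names(suggestions):
    """Merge duplicate suggestions (ignoring case and extra whitespace)
    and count how many times each one was suggested.

    Returns (name, count) pairs, most frequently suggested first; ties keep
    first-seen order, and the spelling kept is the first one seen.
    """
    counts = Counter()
    display = {}
    for name in suggestions:
        cleaned = " ".join(name.split())  # collapse runs of whitespace
        if not cleaned:
            continue
        key = cleaned.casefold()
        display.setdefault(key, cleaned)
        counts[key] += 1
    return [(display[key], n) for key, n in counts.most_common()]
```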

Phase 2: Crowd-reduce!

Now we use one of the nicer features of AYTM: Reorder questions. Basically we'll be presenting viewers with the 7 finalists in random order, and they'll rank these finalists from favorite to least favorite. Here's what mine looked like:

Nothing too special here, except that I'm using the Reorder question type (and I checked Randomize Answers, in the red box I added). I also added a cat picture, which a lot of Turkers seemed to like (most of the feedback I got was about it). Lastly, a Reorder question only lets people rank up to 7 items, so I trimmed my 10 names down to 7.

I didn't do a trial run this time, since I assumed it'd still take about 5 minutes, but it actually only took people around 90 seconds. So everything balanced out, money/time-wise.

An exciting hour or two later (or possibly longer), your results will be in. And now for the most interesting part of the exercise!

Analyzing the Final Results

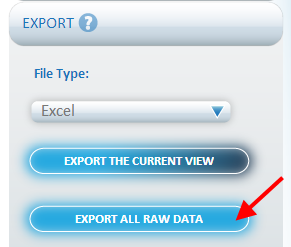

Once everything is finished and you're done Approving the Turkers' submissions (just Approve All, this time), you'll need to export the results, preferably as an Excel doc. You can browse the results online, but I feel like that never answers my questions sufficiently. So Excel it is!

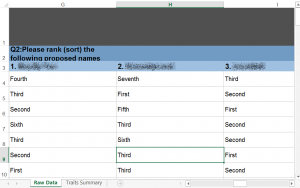

Here's what the Excel raw results look like:

Okay, cool, now what? It depends on exactly what question you want answered. For me, I wanted to know two things: which name had the highest average ranking, and which name was in the top 1/2/3 slots the most frequently.

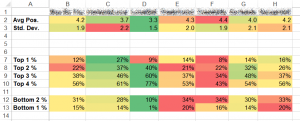

First you'll want to Search/Replace every occurrence of First/Second/Third with 1/2/3, respectively, in your raw data. Then you'll want to create a second Analytics worksheet with your names as columns and your formulas as rows. Here's what mine looked like, complete with Conditional Formatting:

I'll give you the formulas in a minute, hold on. But you can see the second and third column choices were pretty popular: the third column option had an average position of 3.3 and was in the top 3 choices 60% of the time. It was also the least likely to be ranked near the bottom. The second column option was a close second: it was the most popular pick for the first slot, but its other metrics weren't great (and it had the worst standard deviation). Here are the formulas for the first column, whose rankings live in column G of the raw data:

- Avg. Pos.: `=AVERAGE('Raw Data'!G$4:G$740)`

- Std. Dev.: `=STDEV.S('Raw Data'!G$4:G$740)`

- Top 1 %: `=(COUNTIF('Raw Data'!G$4:G$740, "< 2"))/COUNT('Raw Data'!G$4:G$740)`

- Top 2 %: `=(COUNTIF('Raw Data'!G$4:G$740, "< 3"))/COUNT('Raw Data'!G$4:G$740)`

- Top 3 %: `=(COUNTIF('Raw Data'!G$4:G$740, "< 4"))/COUNT('Raw Data'!G$4:G$740)`

- Top 4 %: `=(COUNTIF('Raw Data'!G$4:G$740, "< 5"))/COUNT('Raw Data'!G$4:G$740)`

- Bottom 2 %: `=(COUNTIF('Raw Data'!G$4:G$740, "> 5"))/COUNT('Raw Data'!G$4:G$740)`

- Bottom 1 %: `=(COUNTIF('Raw Data'!G$4:G$740, "> 6"))/COUNT('Raw Data'!G$4:G$740)`
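If you'd rather skip the spreadsheet, the same rows can be computed in a few lines of Python. This is a sketch, not what I actually did: it assumes you've pulled one name's column of rank positions out of the raw export (as numbers, or as ordinal words like First and Second), and that there are 7 finalists. `to_rank` and `rank_stats` are hypothetical helpers.

```python
from statistics import mean, stdev

# Rank positions spelled out as words (assumes the export follows the
# First/Second/Third pattern all the way down to Seventh).
ORDINALS = {"first": 1, "second": 2, "third": 3, "fourth": 4,
            "fifth": 5, "sixth": 6, "seventh": 7}


def to_rank(value) -> int:
    """Turn 'First', 'second' or '3' into an integer rank."""
    text = str(value).strip()
    return ORDINALS.get(text.lower()) or int(text)


def rank_stats(ranks, n_items: int = 7) -> dict[str, float]:
    """Compute the Analytics rows for one name's column of rank positions."""
    # Skip blank cells, as AVERAGE and COUNT do in the spreadsheet
    ranks = [to_rank(r) for r in ranks if str(r).strip()]
    total = len(ranks)
    stats = {
        "Avg. Pos.": mean(ranks),
        "Std. Dev.": stdev(ranks),  # sample standard deviation, like STDEV.S
    }
    # COUNTIF(..., "< k+1") on whole-number ranks is the same as rank <= k
    for k in range(1, 5):
        stats[f"Top {k} %"] = sum(r <= k for r in ranks) / total
    # "> 5" and "> 6" with 7 finalists are the bottom 2 and bottom 1 slots
    for k in (2, 1):
        stats[f"Bottom {k} %"] = sum(r > n_items - k for r in ranks) / total
    return stats
```

Calling `rank_stats` once per name and laying the results side by side gives you the Analytics sheet, minus the conditional formatting.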

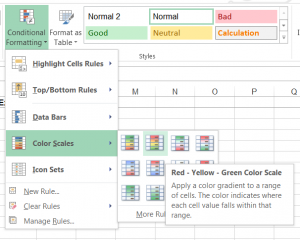

The conditional formatting is pretty easy; just select a row and then apply the formatting:

Ultimately, I went with the name in the second column: it performed pretty well, and I thought it was more memorable. Still, using Mechanical Turk to come up with over 200 names and then narrow them down to two is quite useful! At the end of the day, though, you have to make the call yourself.

Conclusion

The overall experience was pretty exciting and informative. For a brief moment in time, you're hiring dozens of people to come up with exciting names for you, more than any one person could come up with. It reminded me a lot of part of Eden of the East; you'll know what I mean if you've seen it (p.s. see it).

Next time, I'll make sure to specify the format in which people should submit their responses, and I'd like to automate away the whittling of names from 200+ to 7; that seems like something I, the Terrible Naminator, should have no part in. On the other hand, doing it by hand guarantees that you don't end up with a top 7 you don't like.

Find this article useful? Let me know in the comments!

[1]: http://www.softwarebyrob.com/ specifically

[2]: http://pyxlin.wordpress.com/2007/06/06/amazons-mechanical-turk-converts-26-of-surveys-into-solid-leads/